| Uploader: | Nichiai |

| Date Added: | 11.08.2015 |


windows - download a file from a website with batch - Stack Overflow

Yes, that method was used as the basis of what I am trying to do here, but when I typed that one up, it would not download anything. I understand what he is trying to do, but his method of splitting the source code to grab the links seems inefficient. Yet without using his method, it is difficult for me to scavenge some of the useful code to better my own (lack of proficiency on my part).

3/26/ · PowerShell has shipped with Windows by default since Windows 7. It's not a replacement for Command Prompt, but it can do things that Command Prompt can't, and it's often easier to use. A common example of this is downloading files.

5/1/ · The main cmdlet of the script is Invoke-WebRequest, which fetches information from a web site. Once the script has finished executing, all files are downloaded and you can view the download folder. I drilled down further into the folders and confirmed the files are there. Download this script here; it is also available on blogger.com

Windows script to download files from website

Does anyone know how I could make a script that would go to a given web address and download a text file from that address and then store it at a given location?
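The thread never settles on one tool for this, so the following is only a minimal sketch of the task as asked (fetch one file from a URL and store it at a chosen location), written in Python using nothing but the standard library. The function name `download_file` and its parameters are made up for illustration, and it assumes the address can be fetched without a login step.

```python
import shutil
import urllib.request
from pathlib import Path


def download_file(url: str, destination: str, timeout: float = 30.0) -> Path:
    """Fetch `url` and write the response body to `destination`."""
    target = Path(destination)
    # Create the destination folder if it does not exist yet.
    target.parent.mkdir(parents=True, exist_ok=True)
    # Stream the body to disk rather than holding it all in memory.
    with urllib.request.urlopen(url, timeout=timeout) as response, \
            open(target, "wb") as out:
        shutil.copyfileobj(response, out)
    return target
```

Running it daily would then be a matter of calling the function from a scheduled task; the scheduling itself is outside this sketch.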

Say: website. It would need to run daily, and capture about 18 files. Right now I get to go to the site daily, and download all 18 files and, well...

Web scraping seems to be a hot topic these days. If you want to do simple scripting, it should be fairly simple using the Internet Explorer object. In C#, this would be trivial using WebClient and its ilk. Perl also has an HTTP library with this functionality; I can't remember the name of it off the top of my head.

I believe Python is fairly straightforward as well. Lots of options!

Sounds like wget is just what you need.

Oh well. No FTP access, and if at all possible, the script needs to be written by hand.

So I really can't use any third-party programs to get the data (local security policies and that crap prevent it, though I suppose understandably so). I don't suppose I can do it with a DOS batch file? I was using a batch file to drive the rest of the program (see my other post about moving to a given network location and opening the folder with the current date). Basically I'd go to our reports website, download the report, then move to a folder on the network with today's date and dump the report there.
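The "folder with today's date" step can be sketched on its own. This is an illustration only: the thread does not say how the dated folders are named, so the `YYYY-MM-DD` format, the function names, and the `root` parameter are all assumptions.

```python
import shutil
from datetime import date
from pathlib import Path
from typing import Optional


def dated_folder(root: str, day: Optional[date] = None) -> Path:
    """Return (and create if needed) root/<YYYY-MM-DD> for the given day."""
    day = day or date.today()
    # Assumed naming scheme; adjust the format to match the real folders.
    folder = Path(root) / day.strftime("%Y-%m-%d")
    folder.mkdir(parents=True, exist_ok=True)
    return folder


def file_report(report_path: str, root: str, day: Optional[date] = None) -> Path:
    """Move a downloaded report into the dated folder under `root`."""
    folder = dated_folder(root, day)
    destination = folder / Path(report_path).name
    # shutil.move also works across drives and network shares.
    shutil.move(str(report_path), str(destination))
    return destination
```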

From that point I do other things with the reports, which are already automated. Is there any way to get that using CMD?

Sadly I don't get to use any compilers, so Visual Studio, or even C and the like, are out of my reach at work.

At any rate, you should be able to use wget in a DOS batch file without any trouble at all. Your call might look something like this (assuming wget is in your path): wget -q website.

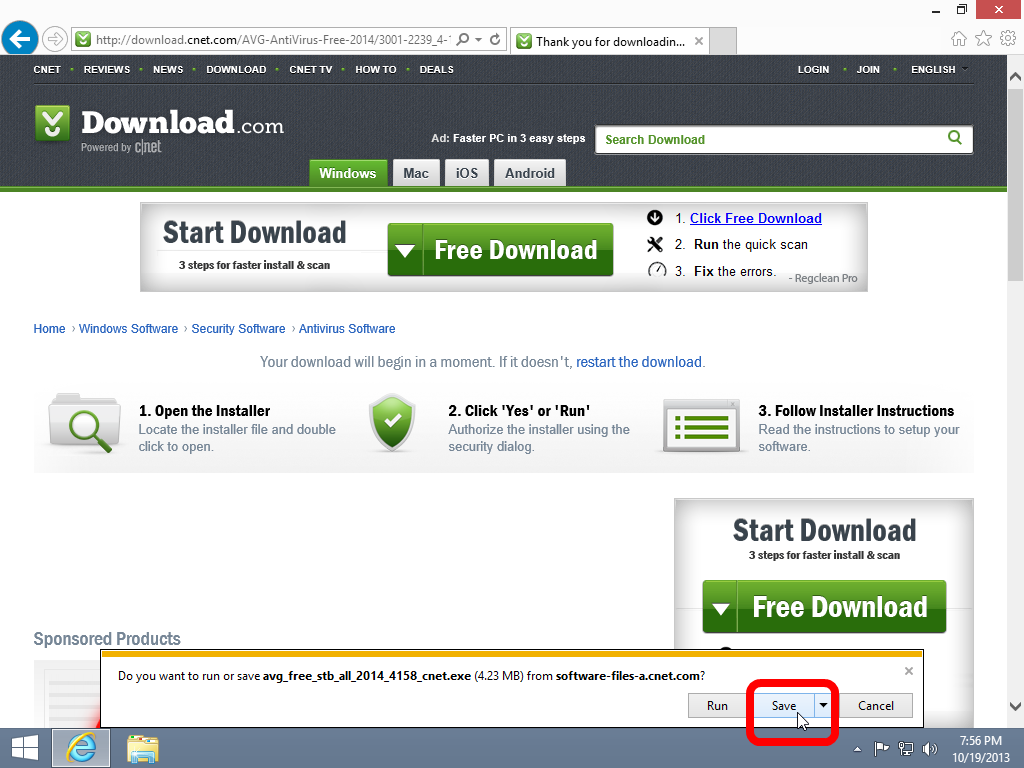

I've used wget in the past, but like I said I can't use ANY third-party programs, so I have to do it all from the ground up. I just need some way for my script to select "SAVE" and then say where to save the file.

It might help with some direction.

You mention not having access to any compilers. But that shouldn't prevent you from using an already compiled executable.

Due to security policies at the organization where I work, we can't install or run any non-licensed or uninspected software. Sadly, wget would not be allowed to run.

Long story short, any tools I want to use that we don't already have I have to build with my bare hands.

Building wget or curl from source does not count? Why not?

Primarily because they would have no idea what it was doing in the source, and honestly, I doubt I would either. More to the point, even if they would let me compile it, I would have no way to do so.

I have no tools on the computer other than Windows and Office.

Strange that you have an internet connection there. Usually, when security is as high as you describe, you can only access the intranet. Anyway, you really only have to open a socket to port 80 (or your proxy's port) and send an HTTP request, as seen in koala's post.
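The "open a socket to port 80 and send an HTTP request" idea can be sketched as follows. koala's original post is not reproduced in this thread, so this is not that post's code; it is a minimal Python illustration with made-up function names. It sends a plain HTTP/1.1 GET, which requires a `Host` header, and does nothing about authentication or proxies.

```python
import socket


def build_get_request(host: str, path: str = "/") -> bytes:
    """Build the raw bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",          # mandatory in HTTP/1.1
        "Connection: close",      # ask the server to close when done
        "",                       # blank line ends the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def raw_http_get(host: str, path: str = "/", port: int = 80,
                 timeout: float = 10.0) -> bytes:
    """Send the request over a TCP socket and return the raw response."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:          # server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)
```

The returned bytes include the status line and headers as well as the body, so a caller would still need to split on the first blank line before saving the file.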

You should provide the "Host: www. You could always use a little VBScript.

I don't make the rules, I just have to work here. Tried telnet, no dice sadly. The server won't let me connect to it.

I wonder what IE is doing that telnet isn't, or perhaps can't. I tried the base part of the URL, but no dice. It seems to authenticate through some server-side Java program or some such.

Try this script. It works for me for a basic site. Didn't try anything with the authentication stuff.

ScriptName, ". Write inputText objOutputFile. The output is saved as a. You can tell because some objects are dimmed and some aren't. Also, I never program in VBScript, so if something looks crappy in the code, it's because it's crappy.

In this case you probably have to use a proxy server. Look at IE's settings.

Has anyone recommended wget?

Web; using System. Net; using System. ReadToEnd ; r. Close ; webresponse.

Every company I've ever worked for has had such a policy, but none of them ever actually enforce it.

I would recommend you ask your boss if you can install wget. If he says no, then the above will work for you.

Well, I figured a bit more out about what is happening. The reports are housed on the company's intranet, and it looks like there is a Java scriptlet that is called by the URL. So I have to go to the entire URL at the start to get the file. So in IE when I paste reports. I'm sure wget will work just fine, and at this point I'm seriously considering using it.

Already sent an e-mail to our IT guys to wait for their blessing. I don't think it will be possible to get the files using a script, sadly. So if one WERE to use wget, how would one go about telling it which directory to save a file in? I can't seem to find that in the documentation. EDIT: More to the point, wget fails to connect to the website.

It resolves reports. It will always connect to reports.

It seems to me that you don't know enough about HTTP to make this work, even though it is probably quite easy. My advice: learn more about URLs and the HTTP protocol and find out what really happens, use telnet for a proof of concept, then create a script. If you are lazy, use a sniffer like Ethereal on your computer.

Can you be a little more specific here? But that's assuming your authentication is based on IP, or some sort of input forms, or basic auth, something that isn't too outlandish. As long as you can eventually get to the report without some weird, say, ActiveX control (just throwing that out there), then it should be fairly easy.

Good luck!

That's the thing, I can't post the URL. I do know that it passes it to a Java scriptlet, and unless that Java portion is passed all the data it needs, you get a denied error. Following the URL is a servlet? Then following those comes the rest of the detail narrowing it down to which file the user is requesting.
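The URL shape described here (a servlet path, a `?`, then details identifying the requested file) matches an ordinary query string. Since the real URL is never posted, the sketch below uses an invented host, path, and parameter names purely to show how such a URL could be assembled and taken apart again in Python.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit


def build_report_url(base_url: str, params: dict) -> str:
    """Append GET variables to `base_url` after a '?' separator."""
    # urlencode escapes spaces and reserved characters in the values.
    return f"{base_url}?{urlencode(params)}"


def read_report_params(url: str) -> dict:
    """Recover the GET variables from a full URL."""
    return dict(parse_qsl(urlsplit(url).query))


# Hypothetical example values; the thread does not give the real ones.
example = build_report_url(
    "http://reports.example/servlet/Report",
    {"name": "daily sales", "date": "2015-08-11"},
)
```

Pasting a working URL from the browser into `read_report_params` is one way to see exactly which variables the servlet expects before trying to script the request.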

Because I don't need to know what that is. So based on what you've said, it would seem that you go to some URL like reports. Again, the module is very handy in this case.

Let me know and I'll explain how to do it. GET variables are appended to a URL with a ?

NET framework and the. This will create an ArsHelp executable you can run.

Text; using System. ReadToEnd ; WriteFile filename, response ; r.


3/31/ · Script to download a file from a website automatically 54 posts • put it in a batch file and schedule it in Windows. Maxer. I don't think it will be possible to get the files using a.

I have a list of URLs (over ) of patch files from various sources that I need to download. I need to create a batch script that will download these files and dump them all into the same directory. I do not have administrative privileges on the system I need to download to, so it needs to be a batch or similar solution.
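For the list-of-URLs case, one possible shape is sketched below in Python rather than batch, on the assumption that a Python interpreter is available without administrative rights. The function names and the `download.bin` fallback are invented for illustration; files that share a name would overwrite each other, which this sketch does not handle.

```python
import shutil
import urllib.request
from pathlib import Path
from urllib.parse import unquote, urlsplit


def filename_from_url(url: str, fallback: str = "download.bin") -> str:
    """Use the last path segment of the URL as the local file name."""
    name = Path(unquote(urlsplit(url).path)).name
    return name or fallback


def download_all(urls, directory):
    """Download every URL into `directory`; return (saved, failed) lists."""
    out_dir = Path(directory)
    out_dir.mkdir(parents=True, exist_ok=True)
    saved, failed = [], []
    for url in urls:
        target = out_dir / filename_from_url(url)
        try:
            with urllib.request.urlopen(url, timeout=30) as response, \
                    open(target, "wb") as out:
                shutil.copyfileobj(response, out)
            saved.append(target)
        except OSError as exc:
            # URLError and HTTPError are OSError subclasses; record and go on.
            failed.append((url, str(exc)))
    return saved, failed
```

Collecting failures instead of stopping at the first one means a single dead link does not block the rest of the list.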
